For the last couple of years, I've been pretty skeptical of AI coding tools and, candidly, of AI in general. There’s been a lot of hype, with claims that AI will replace human engineers and write perfect code without any human intervention.

I still see serious limitations to what these tools can do. They're much less a replacement for software engineers than a powerful tool that amplifies their productivity. But I've found them to be genuinely amazing at certain tasks, particularly building simple new tools from the ground up and accelerating routine coding work. They excel at generating boilerplate, helping with API interactions, and suggesting patterns that might otherwise take me hours to research and recall after time away from daily coding.

If you already know how you’d go about building something, AI can take care of a lot of that work much faster than you could on your own. With the help of Claude Code, I've started making meaningful technical contributions to one of our most important new projects, and I’m not going to lie, I’m having a lot of fun doing it.

I figured I’d take a few minutes to share my thoughts on both this personal AI journey and our engineering team’s philosophy around using AI in development. My hope is that this piece helps our customers better understand the balance we try to strike between AI-enabled efficiency and human-driven guardrails. Plus, you might just find a few new prompts and workflows to bring back to your own engineering team.

Using AI to Test AI

At FOSSA, we're currently working on a new project that uses AI agents to help developers with dependency upgrades. One component of this work is testing and optimizing how we use LLM API "tool calls" to make these agents more effective. If you're not familiar with tool calls, they're a way for AI models to interact with external systems through defined function interfaces. They require a more complex interaction than a single request and response: the LLM asks the caller to invoke one or more tools, then expects to receive the tool results before it finalizes its response.
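To make that back-and-forth concrete, here's a minimal Python sketch of the loop a caller runs. It assumes message and content-block shapes modeled on the Anthropic Messages API (the model returns `tool_use` blocks with `stop_reason` set to `"tool_use"`, and the caller replies with `tool_result` blocks), but the model is a hypothetical stub (`fake_model`), and the tool (`get_latest_version`) and its version data are made up for illustration. A real client would call the API instead of the stub.

```python
from typing import Any, Callable

Message = dict[str, Any]


# Hypothetical tool for illustration; a real agent would query a package registry.
def get_latest_version(package: str) -> str:
    versions = {"lodash": "4.17.21", "express": "4.19.2"}
    return versions.get(package, "unknown")


TOOLS: dict[str, Callable[..., str]] = {"get_latest_version": get_latest_version}


def fake_model(messages: list[Message]) -> Message:
    """Hypothetical stand-in for the LLM; response shape mirrors the Messages API."""
    last = messages[-1]
    # If the latest user turn carries tool results, finish with a text answer.
    if isinstance(last["content"], list) and last["content"][0].get("type") == "tool_result":
        result = last["content"][0]["content"]
        return {"stop_reason": "end_turn",
                "content": [{"type": "text", "text": f"The latest version of lodash is {result}."}]}
    # Otherwise, ask the caller to run a tool and report back.
    return {"stop_reason": "tool_use",
            "content": [{"type": "tool_use", "id": "toolu_01",
                         "name": "get_latest_version", "input": {"package": "lodash"}}]}


def run_agent(prompt: str,
              model: Callable[[list[Message]], Message] = fake_model,
              max_turns: int = 5) -> str:
    messages: list[Message] = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        response = model(messages)
        # Echo the assistant turn back so the model sees its own tool request next time.
        messages.append({"role": "assistant", "content": response["content"]})
        if response["stop_reason"] != "tool_use":
            return "".join(b["text"] for b in response["content"] if b["type"] == "text")
        # Run every requested tool and send the results back, matched by tool_use_id.
        results = []
        for block in response["content"]:
            if block["type"] == "tool_use":
                output = TOOLS[block["name"]](**block["input"])
                results.append({"type": "tool_result", "tool_use_id": block["id"], "content": output})
        messages.append({"role": "user", "content": results})
    raise RuntimeError("model did not finish within max_turns")


print(run_agent("What is the latest version of lodash?"))
# → The latest version of lodash is 4.17.21.
```

The two details that tend to trip people up are visible here: the assistant's tool request has to be appended to the conversation before the results, and each `tool_result` must reference the `id` of the `tool_use` block it answers.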

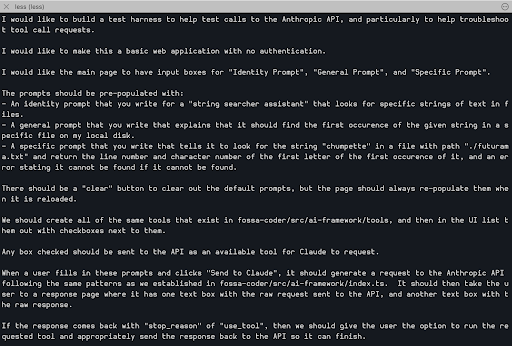

Getting this right was going to require a lot of trial and error. I wanted to be able to quickly hit the Anthropic API with a variety of requests, see the raw responses coming back, and learn how to respond properly to tool calls, as well as what to avoid. Here’s the post I made to our “learn about AI engineering” Slack channel in the hopes of building excitement about these tools.

I wanted to share a recent experience that demonstrates what these tools can enable for someone who hasn't been coding regularly. Yesterday, I got hands-on with our auto-updater project, something that would have been much more challenging without what I call "Chat-Oriented Programming" (CHOP) tools like Claude Code.

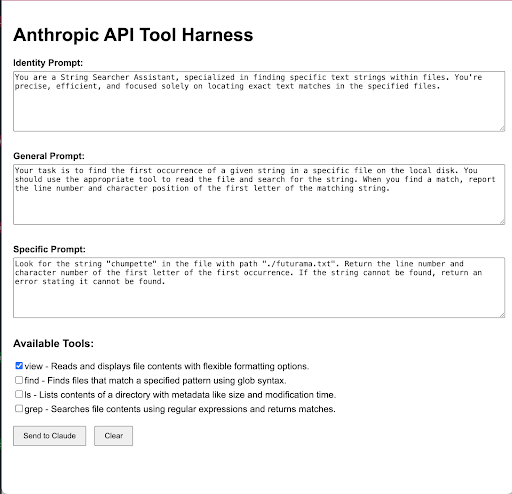

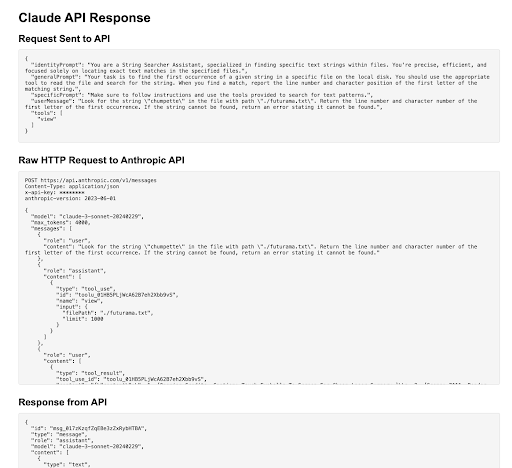

I was working with Chad and team on implementing and optimizing tool calls from the LLM, and we needed to select and inspect requests and responses to better understand these tool calls and respond to them properly. I figured a simple web UI for experimenting with this functionality, borrowing code from our actual "fossa-coder" project, would be helpful.

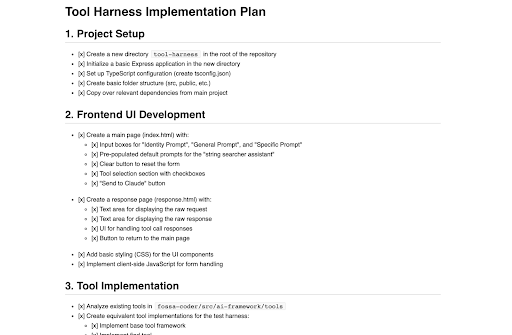

I started by writing up detailed instructions for Claude about what I wanted to build. Claude responded with a step-by-step plan for creating this tool. From there, I asked Claude to implement each step one at a time. It would write code, explain what it did, and then ask if I wanted to move to the next step. Altogether, it took Claude maybe 5-10 minutes to build the initial version of everything.

This is where things got a bit more complex. We ran into a few small bugs that needed fixing, and when I tried to add tools to the interface, I kept getting errors from the API. But the process stayed productive: I could paste the errors into Claude, which would then try to fix the issues. Claude even added debug logging so I could capture more information about the problems, which I could then feed back to get better solutions.

Claude did struggle to get the request format right for the tools schema, but some of that was due to inconsistencies in how we had implemented our own tools, which it was basing its code on.
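For anyone who hasn't written one, a tool definition in the Anthropic API is a JSON object with a `name`, a `description`, and an `input_schema` expressed as JSON Schema. The checker below is a hypothetical sketch of the kind of sanity check that can catch a mismatched definition before it reaches the API. It isn't from our codebase, and its rules (for example, flagging OpenAI-style `parameters`/`function` keys) are illustrative assumptions, not a complete validation of what the API requires.

```python
from typing import Any


def check_tool_definition(tool: dict[str, Any]) -> list[str]:
    """Return likely problems with an Anthropic-style tool definition.

    Hypothetical helper for illustration; it checks only a few common mistakes.
    """
    problems: list[str] = []
    for key in ("name", "input_schema"):
        if key not in tool:
            problems.append(f"missing '{key}'")
    if "description" not in tool:
        problems.append("missing 'description' (recommended)")
    # OpenAI-style definitions nest a 'parameters' schema under 'function' instead.
    if "parameters" in tool or "function" in tool:
        problems.append("OpenAI-style keys found; Anthropic expects 'input_schema'")
    schema = tool.get("input_schema") or {}
    if schema and schema.get("type") != "object":
        problems.append("input_schema.type should be 'object'")
    # Every required field should actually be declared in properties.
    properties = schema.get("properties", {})
    for field in schema.get("required", []):
        if field not in properties:
            problems.append(f"required field '{field}' is not defined in properties")
    return problems


good_tool = {
    "name": "get_latest_version",
    "description": "Look up the latest published version of a package.",
    "input_schema": {
        "type": "object",
        "properties": {"package": {"type": "string"}},
        "required": ["package"],
    },
}
bad_tool = {"name": "get_latest_version", "parameters": {"type": "object", "properties": {}}}

print(check_tool_definition(good_tool))  # []
for problem in check_tool_definition(bad_tool):
    print(problem)
```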

The remarkable part is that in about 90 minutes total, I went from nothing to having a functional debugging UI that's actually useful for our team. And I was even able to write most of this post while Claude was working on code generation and fixes.

Finding the Right Balance Between Hype and Utility

It's human nature to be apprehensive and skeptical of new technologies, especially when they feel overhyped, and especially when they might seem like a threat to your livelihood. There's still way too much hyperbolic speculation around what LLMs can and will do in the near future. They're not actually thinking, they’re not magic, and they can’t figure out what to do without someone giving them a lot of direction.

But when given good direction, they sure can do a lot of tedious and time-consuming work effectively in a very short amount of time. If you're an engineering leader, I'd encourage you to keep an open mind and try these tools to discover for yourself where they're useful and where they fall short. They won't replace your engineering team, but they certainly can make your team meaningfully more productive. The more you and your engineers experiment with different tools and different ways of using them, the more places you’ll find them useful. And if you’re a “post-technical” engineering leader who misses coding but can’t find the time to ramp back up on modern tooling, frameworks, and languages, I implore you to try building something with a chat-oriented AI coding agent. You might be surprised at how good the results are, and you just might have a great time doing it.

FOSSA’s AI Coding Philosophy

This balanced approach to integrating AI into our development workflows has spread across our engineering organization. A few foundational principles help us maximize AI’s benefits while minimizing undesired side effects.

First and foremost, we don’t lower our quality standards for production code. It still has to meet the same style guidelines, test coverage requirements, and clean design principles we’ve always expected.

We are also very flexible about the tools and approaches our engineers use. Every coding project is a little different, and every engineer thinks through problems a little differently, so the right tool for the job may differ from case to case.

For example, a few of our engineers prefer to use LLMs only for one-off tricky problems, such as writing a very complex SQL query or regex. Many team members use IDEs with integrated coding agents, like Cursor, while others prefer the more command-center approach that tools like Claude Code provide.

We’ve generally found the tools most useful for tasks that are time-consuming but relatively straightforward, such as generating many permutations of test cases, producing fixture data, and making simple refactors across a large set of files.

That said, we’ve also used these tools to generate and execute plans for larger functionality changes, and we’ve found that our coding standards and review process ensure only high-quality code makes it out to production.

As the department leader, my goal is to make sure we get the most value from these new tools and keep up with rapidly evolving best practices. I’m still evaluating everything, and I plan to gradually build more structure and guidance around how and when the team should use these tools.

The future shape of professional software engineering still feels a little unclear to me, but I’m quite certain that LLMs will play a large part in it.